For a while I had wanted to experiment with audio visualisation in the browser, using the new functionality in Chrome. My graduation project went the other way around, namely the sonification of the DOM of any webpage. To create this demo I mainly used some html5rocks info.

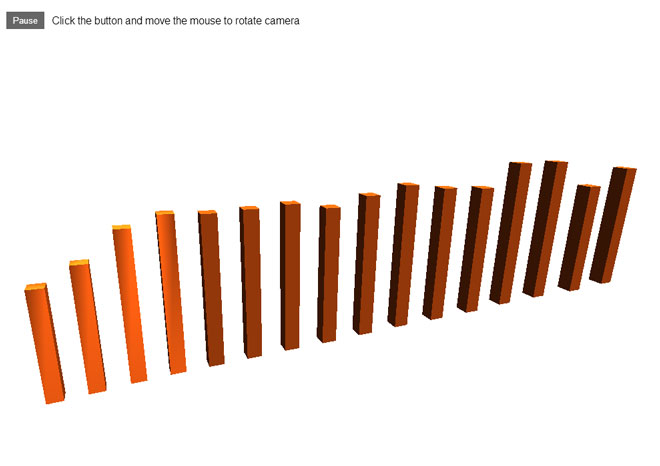

Example

Parts explained

The workhorse of the visualisation is the “webkitAudioContext”, currently available in Chrome. With the webkitAudioContext it is possible to analyse the audio while it plays. The following snippet sets up the analysis.

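    // create the analyser
    // (AudioContext is the standard name; webkitAudioContext is the prefixed fallback)
    var AudioContextClass = window.AudioContext || window.webkitAudioContext;
    var context = new AudioContextClass();
    var analyser = context.createAnalyser();

    // initialize
    function onLoad(e) {
        startThreeJs();

        // `audio` is assumed to be the page's <audio> element, defined elsewhere in the demo
        var source = context.createMediaElementSource(audio);

        // route the audio through the analyser and on to the speakers
        source.connect(analyser);
        analyser.connect(context.destination);

        rafCallback();
    }

    window.addEventListener('load', onLoad, false);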
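
To get a feeling for what the analyser hands back, here is a small sketch that maps a bin index to its approximate frequency. It is not part of the demo: `binCenterFrequency` is a hypothetical helper, and the sample rate of 44100 Hz is an assumption (in the page these values would come from `context.sampleRate` and `analyser.fftSize`, which defaults to 2048).

```javascript
// Sketch: approximate frequency (in Hz) that an analyser bin represents.
// binCenterFrequency is a hypothetical helper, not part of the demo source.
function binCenterFrequency(binIndex, sampleRate, fftSize) {
  return binIndex * sampleRate / fftSize;
}

// Assumed values; in the demo use context.sampleRate and analyser.fftSize.
var sampleRate = 44100;
var fftSize = 2048;

// analyser.frequencyBinCount is always half of fftSize.
var binCount = fftSize / 2;

console.log(binCount);                                   // 1024
console.log(binCenterFrequency(14, sampleRate, fftSize)); // 301.46484375
```

Under these assumptions, the first 15 bins only reach up to roughly 300 Hz, so reading just those bins mostly shows the bass end of the track.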

The rafCallback function is where the visualisation happens: on every available frame, the frequency data of the audio is copied into a byte array. For this visualisation I only used 15 ‘frequency’ bars to keep the animation smooth; for real purposes you should use the whole spectrum.

    // bind the audio data to the WebGL cubes
    function rafCallback(time) {
        window.requestAnimationFrame(rafCallback);

        // read the current frequency spectrum (one byte, 0-255, per bin)
        var freqByteData = new Uint8Array(analyser.frequencyBinCount);
        analyser.getByteFrequencyData(freqByteData);

        for (var j = 0; j < MAX_BAR; j++) {
            var magnitude = freqByteData[j];
            var delta = magnitude / 100; // ranges from 0 to 2.55

            cubes[j].position.y = cubesPos[j] + delta * 0.25;
            cubes[j].scale.y = delta;

            // categorize color on amplitude
            // (silence and the exact boundaries 1 and 2 no longer fall through to red)
            var color;
            if (delta < 1) {
                color = 0xf5a711;
            } else if (delta < 2) {
                color = 0xf55c11;
            } else {
                color = 0xcd0505;
            }

            cubes[j].material.color.setHex(color);
        }
    }
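
If you want to keep a small number of bars but still cover the whole spectrum, one option is to average groups of bins into each bar. The following is only a sketch of that idea, not what the demo does: `averageIntoBars` is a hypothetical helper, and it assumes the number of bars is not larger than the number of bins.

```javascript
// Sketch: reduce a full frequency array to `barCount` averaged bands.
// averageIntoBars is a hypothetical helper, not part of the demo source.
// Assumes barCount <= freqData.length.
function averageIntoBars(freqData, barCount) {
  var bars = new Array(barCount);
  var binsPerBar = Math.floor(freqData.length / barCount);

  for (var i = 0; i < barCount; i++) {
    var start = i * binsPerBar;
    // The last bar absorbs any leftover bins.
    var end = (i === barCount - 1) ? freqData.length : start + binsPerBar;

    var sum = 0;
    for (var k = start; k < end; k++) {
      sum += freqData[k];
    }
    bars[i] = sum / (end - start);
  }
  return bars;
}

// Stand-in data: 8 bins reduced to 2 bars.
var sample = new Uint8Array([0, 100, 200, 100, 50, 50, 250, 250]);
console.log(averageIntoBars(sample, 2)); // [ 100, 150 ]
```

In rafCallback you would call something like `averageIntoBars(freqByteData, MAX_BAR)` once per frame and read the magnitude for each cube from the result instead of from `freqByteData[j]` directly.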

Feel free to use and abuse the source